MiniMax-M1 AI: The 2025 Review of the First Open-Source Hybrid-Attention Reasoning Model

Imagine a world where artificial intelligence can reason through long, complex problems quickly and affordably. MiniMax-M1 AI is a step in that direction, built on a hybrid attention architecture. In this article, we explore what it is, its key features, pros and cons, pricing, who it's ideal for, and how to use it.

Key Takeaways

- MiniMax-M1 AI is an open-source reasoning model from MiniMax, released under the Apache 2.0 license.

- It utilizes a Hybrid Attention Mechanism and a Mixture of Experts (MoE) to improve its performance.

- The AI natively supports a context window of up to 1 million tokens, making it suitable for applications that process very long inputs.

- MiniMax-M1 AI is designed for efficient computing, using about 25% of DeepSeek R1's FLOPs when generating 100K tokens.

- Pros include its advanced technology and flexibility, while cons involve high hardware requirements and deployment complexity.

- The model weights are free to download and use, so the main cost is the hardware needed to run them.

- Ideal for professionals, developers, and businesses looking for robust AI solutions.

- Getting started is easiest through the online chatbot; self-hosting via HuggingFace, vLLM, or Transformers takes more setup.

What is MiniMax-M1 AI?

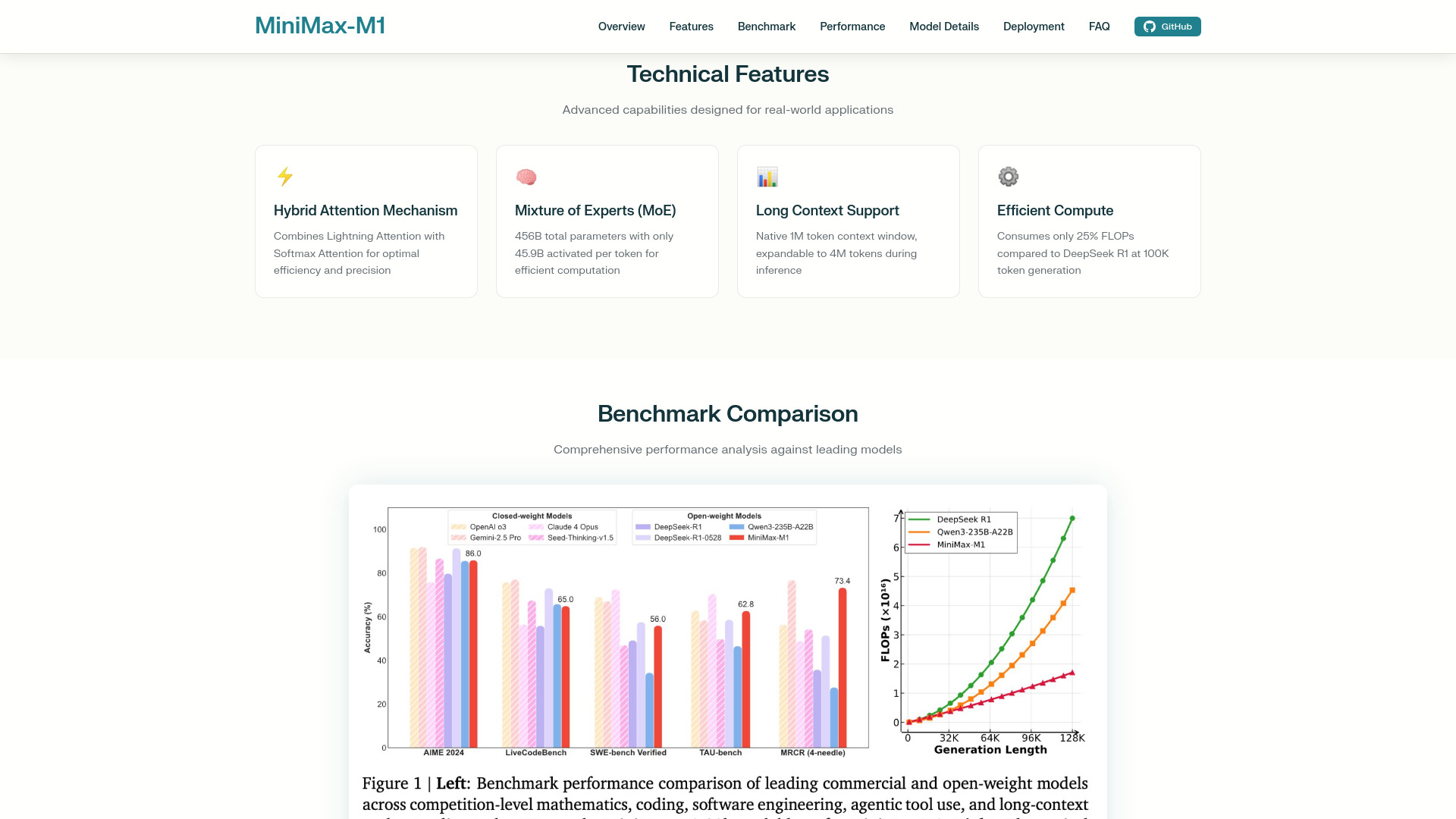

MiniMax-M1 AI is described by its developer, MiniMax, as the world's first open-weight, large-scale hybrid attention reasoning model. It stands out for three reasons: a Mixture of Experts architecture with 456 billion total parameters, native support for a context of up to 1 million tokens (eight times DeepSeek R1's 128K window), and a compute cost of about 25% of DeepSeek R1's FLOPs at a generation length of 100K tokens.

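MiniMax-M1 AI uses a hybrid attention mechanism. It combines Lightning Attention, an efficient implementation of linear attention whose cost grows linearly with input length, with traditional Softmax Attention. In MiniMax's design, one softmax attention layer follows every seven lightning attention layers, which helps the model balance speed and accuracy when reasoning through tough questions.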
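To make the interleaving concrete, here is a minimal sketch of a hybrid layer schedule. It assumes the 7:1 pattern described in MiniMax's technical reports (one softmax layer after every seven lightning layers); the function name `hybrid_layer_schedule` and the 16-layer toy depth are hypothetical, chosen only for illustration.

```python
def hybrid_layer_schedule(num_layers: int, softmax_every: int = 8) -> list[str]:
    """Return the attention type used by each layer.

    Assumption: every `softmax_every`-th layer (counting from 1) uses softmax
    attention and all other layers use lightning (linear) attention, which
    gives a 7:1 lightning-to-softmax ratio when softmax_every == 8.
    """
    if num_layers < 1 or softmax_every < 1:
        raise ValueError("num_layers and softmax_every must be positive")
    return [
        "softmax" if (i + 1) % softmax_every == 0 else "lightning"
        for i in range(num_layers)
    ]


if __name__ == "__main__":
    schedule = hybrid_layer_schedule(16)  # hypothetical 16-layer toy model
    for idx, kind in enumerate(schedule):
        print(f"layer {idx:2d}: {kind}")
    print("softmax layers:", schedule.count("softmax"))
```

The design choice this illustrates: only one layer in eight pays the quadratic cost of softmax attention, while the rest scale linearly with sequence length.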

Features

Hybrid Attention Mechanism

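The defining feature of MiniMax-M1 AI is its hybrid attention mechanism: most layers use Lightning Attention, whose cost grows linearly with input length, while periodic softmax attention layers preserve the precise token-to-token retrieval that linear attention alone can struggle with.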

"MiniMax-M1 AI's hybrid attention combines speed and smarts for the best results in real-world reasoning tasks."
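The toy comparison below shows why that split matters. Causal softmax attention rescans every earlier token for each new one, while causal linear attention folds the past into a fixed-size running state. Note the assumptions: Lightning Attention is an optimized, tiled implementation of linear attention, and this sketch instead uses plain linear attention with the common elu(x) + 1 feature map, so it illustrates the scaling behavior rather than MiniMax's actual kernel.

```python
import math

Vector = list[float]


def softmax_attention(q: list[Vector], k: list[Vector], v: list[Vector]) -> list[Vector]:
    """Causal softmax attention: token t scores all tokens 0..t, so total work is O(n^2)."""
    d = len(q[0])
    out = []
    for t in range(len(q)):
        scores = [sum(a * b for a, b in zip(q[t], k[s])) / math.sqrt(d) for s in range(t + 1)]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(x - m) for x in scores]
        total = sum(weights)
        out.append([
            sum(w * v[s][j] for s, w in enumerate(weights)) / total
            for j in range(len(v[0]))
        ])
    return out


def phi(x: Vector) -> Vector:
    """Positive feature map elu(x) + 1, a common choice for linear attention."""
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]


def linear_attention(q: list[Vector], k: list[Vector], v: list[Vector]) -> list[Vector]:
    """Causal linear attention computed recurrently with a fixed-size state.

    The running state S (d_k x d_v) and normalizer z (d_k) never grow with
    sequence length, so each new token costs O(d_k * d_v) regardless of n.
    """
    dk, dv = len(k[0]), len(v[0])
    S = [[0.0] * dv for _ in range(dk)]
    z = [0.0] * dk
    out = []
    for qt, kt, vt in zip(q, k, v):
        fq, fk = phi(qt), phi(kt)
        for i in range(dk):  # fold the current key/value into the state
            z[i] += fk[i]
            for j in range(dv):
                S[i][j] += fk[i] * vt[j]
        denom = sum(fq[i] * z[i] for i in range(dk))
        out.append([sum(fq[i] * S[i][j] for i in range(dk)) / denom for j in range(dv)])
    return out
```

For a single token both functions simply return that token's value vector. The difference is in the loop structure: the inner work of `softmax_attention` grows with t, while `linear_attention` only ever touches its d_k x d_v state.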

Mixture of Experts (MoE)

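MiniMax-M1 uses a Mixture of Experts (MoE) architecture with 456 billion parameters in total, but only 45.9 billion are activated for each token: a router sends every token to a small subset of expert networks.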

"Being smart with power, MiniMax-M1 runs only about a tenth of its parameters for each token, keeping inference efficient."
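MiniMax-M1 activates 45.9B of its 456B parameters per token, roughly 10%. The generic top-k routing sketch below shows how an MoE layer achieves this: a gate scores every expert, but only the k highest-scoring experts actually run. The four toy experts, the gate weights, and k = 2 are hypothetical; this illustrates the technique in general, not MiniMax-M1's actual router.

```python
import math
from typing import Callable

Vector = list[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate_weights: list[Vector], experts: list[Expert], k: int = 2):
    """Route one token to its top-k experts and mix their outputs.

    Returns (output, chosen_expert_indices). Only the k chosen experts are
    evaluated, which is why active parameters are a fraction of the total.
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    probs = softmax([logits[i] for i in top])  # renormalize over the chosen experts
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)  # unselected experts never run
        out = [o + p * yi for o, yi in zip(out, y)]
    return out, top


# Hypothetical toy setup: four "experts" that just scale their input.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gates = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]
output, chosen = moe_forward([2.0, 1.0], gates, experts, k=2)
print("experts used:", chosen)  # prints: experts used: [0, 3]
```

Real MoE layers add details such as load-balancing losses and capacity limits, which this sketch omits.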

Long Context Support

MiniMax-M1 natively supports a context window of up to 1 million tokens, enough to hold several long documents or a large codebase in a single prompt.

"A 1M token window means MiniMax-M1 can keep a lot more in mind at the same time, outpacing many other AIs."
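A 1M-token window is only practical if memory stays manageable. The sketch below contrasts the key/value (KV) cache that softmax attention layers must keep, which grows with every token, against the fixed-size state of a linear attention layer. All model dimensions here (8 layers, 8 heads, head size 128, 2 bytes per value) are hypothetical and are not MiniMax-M1's real configuration; in a hybrid model the softmax layers still keep a KV cache, but only for a fraction of the layers.

```python
def kv_cache_bytes(seq_len: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_elem: int = 2) -> int:
    """KV cache for softmax attention layers: one key and one value vector
    per token, per head, per layer, so memory grows linearly with seq_len."""
    return 2 * seq_len * n_layers * n_kv_heads * head_dim * bytes_per_elem


def linear_state_bytes(n_layers: int, n_heads: int, head_dim: int,
                       bytes_per_elem: int = 2) -> int:
    """Recurrent state for linear attention layers: a head_dim x head_dim
    matrix per head, per layer, independent of sequence length."""
    return n_layers * n_heads * head_dim * head_dim * bytes_per_elem


# Hypothetical dimensions for illustration only.
for tokens in (128_000, 1_000_000):
    kv = kv_cache_bytes(tokens, n_layers=8, n_kv_heads=8, head_dim=128)
    state = linear_state_bytes(n_layers=8, n_heads=8, head_dim=128)
    print(f"{tokens:>9,} tokens: KV cache {kv / 1e9:6.2f} GB, linear state {state / 1e6:.2f} MB")
```

Going from 128K to 1M tokens multiplies the KV cache by about 7.8, while the linear attention state does not change at all.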

Efficient Compute

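Compared with DeepSeek R1, MiniMax-M1 AI uses only about 25% of the compute, measured in floating-point operations (FLOPs), at a generation length of 100K tokens. Much of the saving comes from lightning attention, whose cost grows linearly rather than quadratically with sequence length.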

"Save power and time—MiniMax-M1 is built to deliver great results with much less computing muscle."
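The rough cost model below shows why the savings grow with sequence length. It counts only attention cost, drops constant factors, and assumes a hypothetical 80-layer stack with one softmax layer per eight. It is not a reproduction of the 25% figure, which compares whole models (including the MoE feed-forward layers) at 100K generated tokens.

```python
def attention_cost(n: int, d: int, kind: str) -> int:
    """Attention cost for n tokens and head size d, constant factors dropped."""
    if kind == "softmax":
        return n * n * d  # all token pairs are scored: quadratic in n
    if kind == "lightning":
        return n * d * d  # each token updates a d x d state: linear in n
    raise ValueError(f"unknown attention kind: {kind}")


def hybrid_cost_ratio(n: int, d: int = 128, num_layers: int = 80,
                      softmax_every: int = 8) -> float:
    """Attention cost of a hybrid stack relative to an all-softmax stack of the same depth."""
    kinds = ["softmax" if (i + 1) % softmax_every == 0 else "lightning"
             for i in range(num_layers)]
    hybrid = sum(attention_cost(n, d, k) for k in kinds)
    full = num_layers * attention_cost(n, d, "softmax")
    return hybrid / full


for n in (1_024, 100_000, 1_000_000):
    print(f"n={n:>9,}: hybrid attention costs {hybrid_cost_ratio(n):.3f} of full softmax")
```

As n grows, the ratio approaches 1/8 (the share of softmax layers), because the lightning layers' n·d² term becomes negligible next to the softmax layers' n²·d term.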

Pros and Cons

Pros of MiniMax-M1 AI

| Pros | Details |
|---|---|
| Open Source under Apache 2.0 | Free to use, modify, and distribute for both research and commercial purposes. |
| Industry-Leading Context Length | Handles up to 1M tokens natively, about 8x DeepSeek R1's 128K window. |
| Massive Model Power | 456B total parameters for complex reasoning, of which only 45.9B are activated per token. |
| Hybrid Attention Mechanism | Interleaves Lightning Attention with Softmax Attention to balance speed and accuracy. |
| Efficient Computation | Uses about 25% of DeepSeek R1's FLOPs at a generation length of 100K tokens. |

Cons of MiniMax-M1 AI

| Cons | Details |
|---|---|
| High Hardware Requirements | Running a 456B-parameter model requires substantial GPU memory and compute, especially for long-context workloads. |
| May Require Technical Know-How | Deployment (for example, with vLLM or Transformers) can be complex without prior AI or engineering experience. |
| Learning Curve for New Users | Beginners may need extra time to understand concepts like hybrid attention and Mixture of Experts. |

Pricing

When it comes to pricing, there's good news for anyone interested in using or exploring this model: MiniMax-M1 is released open-source under the Apache 2.0 license, so the model weights are free to download, use, and modify. The main cost of self-hosting is the hardware needed to run a model of this size.

Who is MiniMax-M1 AI For?

MiniMax-M1 AI is built for anyone who needs powerful, efficient, and open-source AI solutions.

- Researchers who want to push the boundaries of AI reasoning and long-context understanding.

- Developers aiming to build new applications using an efficient, scalable, and open-source model.

- Businesses looking to integrate high-performance AI into their products while saving on computational costs.

- Students and learners exploring artificial intelligence and the latest AI trends.

- Open-source enthusiasts who want to collaborate and innovate with the community.

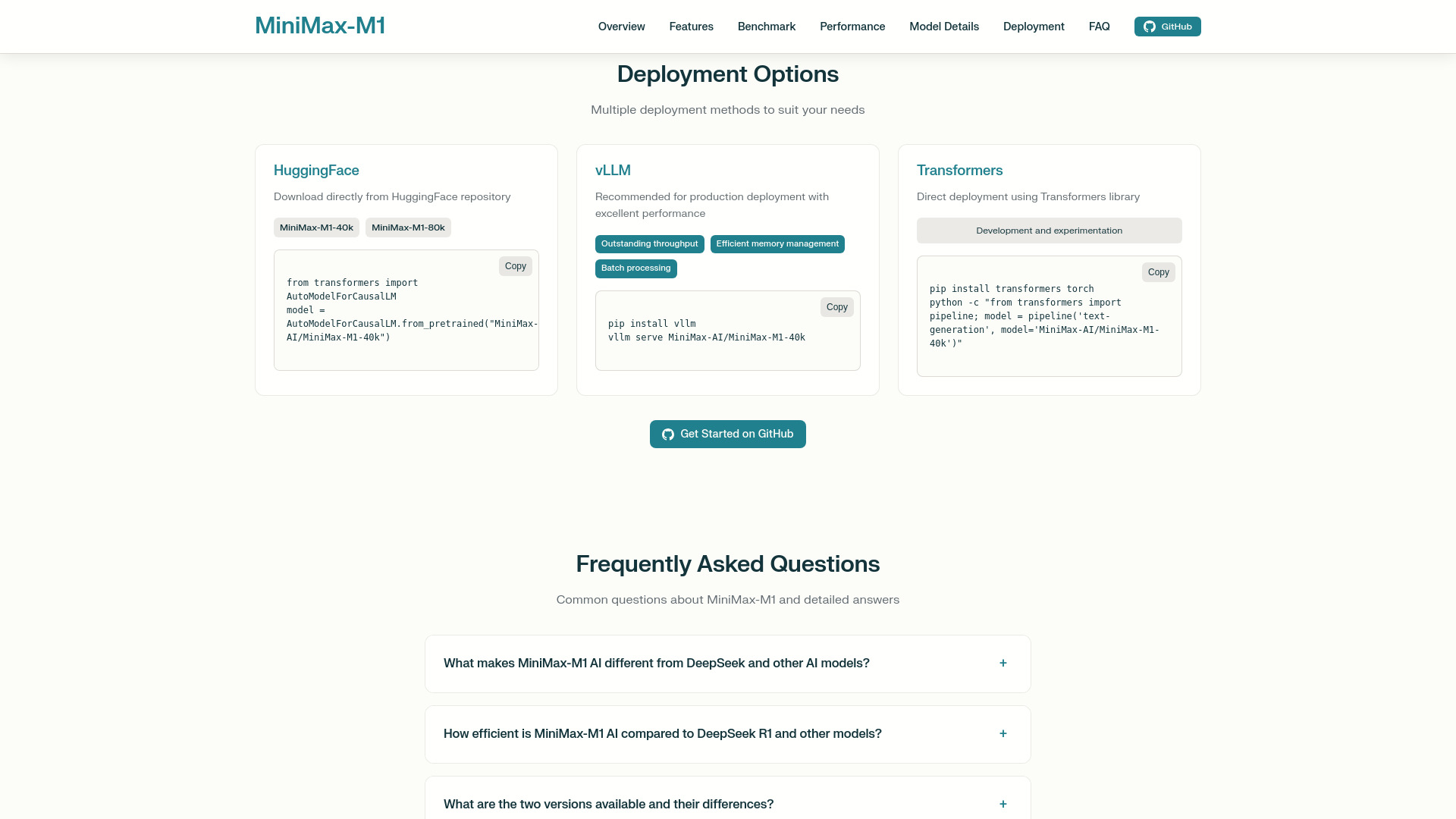

How to Use MiniMax-M1 AI

Getting started with MiniMax-M1 AI is simplest through the online chatbot; self-hosting takes more setup, but several paths are available.

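- HuggingFace Deployment: Download MiniMax-M1-40k or MiniMax-M1-80k directly from the official HuggingFace repository. The two versions differ in their thinking budget, i.e., the maximum length of reasoning output (40K or 80K tokens).

- vLLM for Production: For the best serving performance, especially in production environments, MiniMax recommends vLLM.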

- Transformers Library: Perfect for development and testing, the Transformers library allows direct deployment and experimentation.

- Online Chatbot: If you want to try out MiniMax-M1 instantly without any setup, visit the online chatbot at chat.minimaxi.com.
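If you self-host with vLLM, its OpenAI-compatible server (started with `vllm serve`) accepts standard chat-completion requests over HTTP. The stdlib-only client below is a minimal sketch: the host and port (vLLM's default is 8000), the model ID `MiniMaxAI/MiniMax-M1-40k`, the serve flags in the comment, and the sampling values are assumptions to adapt to your own deployment.

```python
import json
import urllib.request

# Assumption: a vLLM OpenAI-compatible server is already running, e.g. started with
#   vllm serve MiniMaxAI/MiniMax-M1-40k --trust-remote-code --tensor-parallel-size 8
# Host, port, model ID, and sampling values are illustrative, not official settings.
BASE_URL = "http://localhost:8000/v1"
MODEL_ID = "MiniMaxAI/MiniMax-M1-40k"


def build_chat_request(prompt: str, max_tokens: int = 1024,
                       temperature: float = 1.0) -> urllib.request.Request:
    """Build a POST request for the OpenAI-style /chat/completions endpoint."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=600) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Once the server is up, `print(chat("Explain hybrid attention in two sentences."))` sends a prompt and prints the reply. Long reasoning outputs can take a while, hence the generous timeout.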

Conclusion

In summary, MiniMax-M1 AI is a notable release in the world of open-source AI. Its hybrid attention architecture, 1M-token context window, and efficient computation make it a strong option for long-context reasoning tasks.

"MiniMax-M1 delivers both performance and accessibility, lowering the barrier for developers and researchers worldwide to build, test, and deploy advanced AI solutions."

Whether you are a student, developer, researcher, or business innovator, MiniMax-M1 AI opens up countless possibilities.